Specifically, we're going to look whether you should install Docker through Docker for Windows or Minikube AND whether you should use Minikube, Kind, or K3s for Kubernetes.

Best way to learn docker and kubernetes how to#

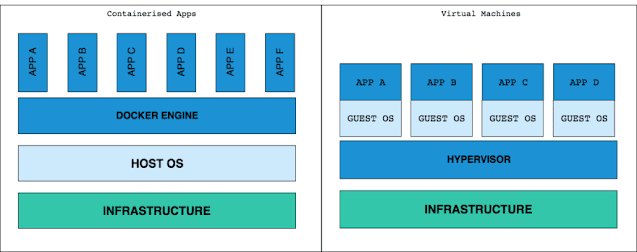

Today, you're going to determine which combination is best for you and get up to speed on how to install it. It depends on your hardware and operating system. So how do we choose the right Virtual Machine? This will let every Linux application or executable run inside a container in the virtual machine. You could create a Virtual Machine that runs Linux on your Windows host. That's because containers expect to use the kernel of the operating system they're designed for.Ī Linux executable expects a Linux host, and there's no way to run it on Windows! Unfortunately, you can't run Linux containers on a Windows host or vice versa. However, the majority of the time, when people say containers, they refer to Linux containers. So what's the problem with getting both of these tools on Windows?Ĭontainers come in two flavours: Windows and Linux containers. You'll also learn which setup is the best with regards to your machine.ĭocker and Kubernetes are two popular tools to run containers at scale. If your workloads grow slowly and monotonically, it may be enough to monitor the utilisations of your existing worker nodes and add an additional worker node manually when they reach a critical value.TL DR : In this article you learn how to install the necessary tools to run Docker & Kubernetes on Windows 10: Docker for Windows, Minikube, Kind, and K3s. However, if your workloads do not vary so much, it may not be worth to set up the Cluster Autoscaler, as it may never be triggered. In such scenarios, the Cluster Autoscaler allows you to meet the demand spikes without wasting resources by overprovisioning worker nodes. Using the Cluster Autoscaler makes sense for highly variable workloads, for example, when the number of Pods may multiply in a short time, and then go back to the previous value. Similarly, when the utilisation of the existing worker nodes is low, the Cluster Autoscaler can scale down by evicting all the workloads from one of the worker nodes and removing it. 
In this case, the Cluster Autoscaler creates a new worker node, so that the Pod can be scheduled. The Cluster Autoscaler can automatically scale the size of your cluster by adding or removing worker nodes.Ī scale-up operation happens when a Pod fails to be scheduled because of insufficient resources on the existing worker nodes. The Cluster Autoscaler is another type of "autoscaler" (besides the Horizontal Pod Autoscaler and Vertical Pod Autoscaler). The autoscaler profiles your app and recommends limits for it. Please note that if you are not sure what should be the right CPU or memory limit, you can use the Vertical Pod Autoscaler in Kubernetes with the recommendation mode turned on.
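The scale-up and scale-down behaviour described above can be illustrated with a deliberately simplified toy model. This is not the Cluster Autoscaler's real algorithm, which is considerably more involved; it only tracks CPU requests in millicores, and the names `Node`, `schedule`, `scale_up_if_pending`, and `scale_down_candidate` are hypothetical. The 0.5 scale-down threshold is an assumption chosen to resemble the Cluster Autoscaler's default utilisation threshold; check the documentation for your version.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A worker node in the toy model; only CPU requests (millicores) are tracked."""
    name: str
    allocatable_mcpu: int
    pod_requests: list = field(default_factory=list)

    def requested(self) -> int:
        return sum(self.pod_requests)

    def free(self) -> int:
        return self.allocatable_mcpu - self.requested()

    def utilisation(self) -> float:
        return self.requested() / self.allocatable_mcpu


def schedule(nodes, request):
    """Place a pod on the first node with enough free CPU; None means it stays Pending."""
    for node in nodes:
        if node.free() >= request:
            node.pod_requests.append(request)
            return node
    return None


def scale_up_if_pending(nodes, request, node_capacity):
    """Toy scale-up: add one node only if the pod is Pending and would fit on a new node."""
    node = schedule(nodes, request)
    if node is None and request <= node_capacity:
        nodes.append(Node(f"node-{len(nodes) + 1}", node_capacity))
        node = schedule(nodes, request)
    return node


def scale_down_candidate(nodes, threshold=0.5):
    """Toy scale-down: return an underutilised node whose pods all fit on the other nodes.

    The 0.5 default threshold is an assumption for illustration.
    """
    for node in nodes:
        if node.utilisation() >= threshold:
            continue
        # Simulate re-placing this node's pods elsewhere without mutating real state.
        free = [other.free() for other in nodes if other is not node]
        movable = True
        for request in sorted(node.pod_requests, reverse=True):
            for i, capacity in enumerate(free):
                if capacity >= request:
                    free[i] -= request
                    break
            else:
                # No other node has room for this pod, so this node must stay.
                movable = False
                break
        if movable:
            return node
    return None
```

For example, scheduling two 600m Pods against a single 1000m node makes the second Pod trigger the creation of `node-2`, while a Pod that would not fit even on an empty node triggers no scale-up at all, since adding a node would not help.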

Resource limits are used to constrain how much CPU and memory your containers can use. Like resource requests, they are set using the resources property of a containerSpec. The scheduler uses the requests as one of the metrics to decide which node is best suited for the current Pod (if you set only a limit, Kubernetes uses it as the request too). A container without a memory request or limit has a memory utilisation of zero, according to the scheduler. As a result, an unlimited number of such Pods is schedulable on any node, leading to resource overcommitment and potential node (and kubelet) crashes.

But should you always set limits and requests for memory and CPU? It helps to know what happens when a container exceeds them. If your process goes over the memory limit, the process is terminated. Since CPU is a compressible resource, if your container goes over its CPU limit, the process is throttled, even if it could have used some of the CPU that was available at that moment.

The Liveness probe is designed to restart your container when it's stuck.

Consider the following scenario: your application is processing an infinite loop, and there's no way to exit or ask for help. When the process is consuming 100% CPU, it won't have time to reply to the Readiness probe checks, and it will eventually be removed from the Service. However, the Pod is still registered as an active replica for the current Deployment. If you don't have a Liveness probe, it stays Running but detached from the Service. In other words, not only is the process not serving any requests, but it is also consuming resources.

To handle this, expose an endpoint from your app that always replies with a success response, and consume that endpoint from the Liveness probe.

Please note that you should not use the Liveness probe to handle fatal errors in your app and ask Kubernetes to restart the app. The Liveness probe should be used as a recovery mechanism only in case the process is not responsive.
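A minimal sketch of the Liveness endpoint described above, using only Python's standard library. The path `/healthz`, the default port 8080, and the helper name `make_health_server` are hypothetical choices, not something the text prescribes; a Kubernetes `livenessProbe` with `httpGet` pointing at the same path and port would consume it. The handler deliberately performs no dependency checks, matching the advice that the endpoint should always reply with a success response.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

HEALTH_PATH = "/healthz"  # hypothetical path; must match the probe configuration


class HealthHandler(BaseHTTPRequestHandler):
    """Answers GET /healthz with 200 OK; every other path gets a 404."""

    def do_GET(self):
        if self.path == HEALTH_PATH:
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        # Silence per-request logging; probes hit this endpoint every few seconds.
        pass


def make_health_server(port=8080):
    """Create (but do not start) the health-check server on all interfaces.

    Port 8080 is an assumed default; port=0 picks a free port (useful in tests).
    """
    return ThreadingHTTPServer(("0.0.0.0", port), HealthHandler)
```

In an application, you would typically start it with `make_health_server().serve_forever()` in a daemon thread next to your main work. If the process stops responding, the probe requests time out and Kubernetes restarts the container.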
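To make the resource requests and limits discussion concrete, here is a small arithmetic sketch. `fits()` is a hypothetical, heavily simplified stand-in for the scheduler's memory check: it only sums memory requests, so Pods without a request count as zero and never stop another Pod from fitting. `cfs_quota_us()` assumes the commonly described conversion of a CPU limit into a Linux CFS quota (limit in millicores times the period, divided by 1000) with the default 100 ms period; check your kernel and kubelet configuration before relying on exact numbers.

```python
def fits(node_allocatable_mib, scheduled_requests_mib, pod_request_mib):
    """Simplified scheduler check: does the pod's memory request fit on the node?

    Only requests are summed; a pod without a request contributes 0 MiB,
    which is why such pods can pile up on a node without limit.
    """
    return sum(scheduled_requests_mib) + pod_request_mib <= node_allocatable_mib


def cfs_quota_us(cpu_limit_millicores, period_us=100_000):
    """CPU time (microseconds) a container may use per CFS period under its limit.

    Assumes the 100 ms default period.
    """
    return cpu_limit_millicores * period_us // 1000


def unmet_cpu_us_per_period(cpu_demand_millicores, cpu_limit_millicores, period_us=100_000):
    """CPU time the container wanted but was denied in each period (0 if under the limit)."""
    demand_us = cpu_demand_millicores * period_us // 1000
    return max(0, demand_us - cfs_quota_us(cpu_limit_millicores, period_us))
```

For example, a single busy thread (demand 1000m) under a 250m limit gets 25 ms of CPU per 100 ms period and waits for the remaining 75 ms, even if the node has idle cores. Memory has no such "slow down" option, which is why exceeding the memory limit ends the process instead.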
